What We Learned Building an AI-Native Company (It Wasn't About the AI)

Written by Will Jung, Chief Technology Officer, nCino

At nCino, our goal is to build a product and platform that serves customers in an increasingly AI-native world. Which meant we had to become AI-native first.

But we quickly realized "AI-native" isn't about the technology. It's not about using AI for every task, every role, every process. It's not about everyone becoming an expert in probability theory, neural networks, and transformers.

It's about solving problems, generating context, and investing in people—with AI as the accelerant, not the answer.

As we experimented with and embedded these technologies into our processes and products, we grappled with what being AI-native actually requires. Some experiments succeeded; others failed. But one lesson became clear. If your company is trying to become AI-native, three things matter more than the technology itself: problems, context, and people.

What is the problem?

It is increasingly imperative to be clear and focused on the problems you are solving as a company. Human problems remain human problems, and technology is built to solve them. At their core, human problems do not change. The need for purpose, security, and peace is, and always will be, a constant.

At nCino, this belief, this need, was a reminder to focus on the problems we have chosen to solve as a company. That's all strategy really is. Fundamentally, what choices are we making? What human problems are we solving that we believe we can solve better than anyone?

As AI tools like ChatGPT and Sora have become more accessible, AI-generated outputs have begun to look and sound the same. The whitespace with a chatbox in the middle. The side toggle bar. The gradient purple-to-blue background with floating geometric shapes. LinkedIn posts that all start with a one-sentence paragraph for a "hook." From thought leadership articles that recycle the same takes on "the future of work" to illustrations featuring the same metaphors (lightbulbs for ideas, puzzle pieces for collaboration), everything is becoming an echo chamber of the past, lacking in originality.

You can tell when something (be it writing, an image, or a digital experience) has been thought through with the ingenuity of a human mind, and when it has been generated by a machine and is empty at its core. It's a great irony that we're in the middle of the next great wave of technological revolution, yet so many of us are simply skating to the mean.

Becoming AI-native doesn't actually start with the technology. There will always be technological advancement. But what doesn't change is just as important. What is the constant problem we will always be solving, regardless of the technology? For nCino, our vision is to partner with financial institutions to create a world where intelligent banking fuels dreams, builds communities and drives prosperity.

Creating experiences that remove friction, anticipate needs, and exceed expectations for customers and members of financial institutions requires us to understand the "why?" Why, despite all our technological advancements, do customers at financial institutions still face friction in lending? Product silos. Document after document. Customers treated as transactions rather than relationships. The same "digital transformation" playbook recycled for 15-20 years.

An example from nCino: In commercial banking, most of a banker's time goes to portfolio management—repetitive tasks reviewing documents and data. The macro problem isn't helping banks become better at this work. It's helping their customers navigate the risks and complexities of credit.

We didn't ask, "what can AI do?" We asked, "what's limiting our customers' ability to grow and scale?" The answer: banks need to identify portfolio risks early and act on them faster.

That led to our Continuous Credit Monitoring solution. It embeds AI to proactively spot risks that matter—ECL early warning indicators, covenant testing, relationship risk review, credit health monitoring—and surfaces them for analysts to act on. Intelligence augmenting human expertise, grounded in data. Tools purpose-built for the problem, not the model.

We first anchor our AI use cases in the metric we're moving, not the model we're using.

Content without context is just noise.

Andrej Karpathy, an AI researcher focused on modernizing education in the age of AI, believes that we are entering “the decade of the agent.” In his view, we’re at the very beginning of a major shift in how we interact with artificial intelligence, moving beyond simple chatbots and copilots to more autonomous and powerful AI agents. In this new era, simply having data is not enough. Data without context is just noise, and with agents that distinction becomes even more critical.

Think about your own experience with AI tools. Your first interaction with Claude was probably a generic question—maybe "What are the key trends in fintech?" or "Write a summary of this quarterly report." The output was coherent but generic. But when you provided context—"You are a credit risk analyst at a regional bank evaluating a $2M commercial real estate loan for a medical office building. The borrower has strong financials but the property is in a secondary market"—suddenly the output became useful. That's not the AI getting smarter; that's context transforming noise into signal.

In banking, where precision, compliance, and risk management are non-negotiable, context is everything. A $500,000 loan application means something completely different for a community bank in rural Kansas versus a commercial bank underwriting corporate real estate in Manhattan. The data might be similar—income statements, credit scores, collateral valuations—but without the context of the institution's risk appetite, regulatory environment, portfolio composition, and market position, that data is just noise.

The challenge we face as an industry is that most organizations are trying to build agentic systems on top of generic platforms with generic data. They're essentially asking their AI agents to be forgetful workers — showing up every day with vast general knowledge but no institutional memory, no understanding of their specific business, and no context about what "good" looks like for their organization.

General context isn't enough for banking. A generic AI agent might know that banks issue loans. But it doesn't know:

How YOUR bank's credit committee makes decisions

What YOUR institution's risk appetite looks like in practice

How YOUR customers behave across economic cycles

What exceptions to credit policy are commonly approved vs. rejected

How YOUR operational workflows actually function day-to-day

This institutional context cannot be prompt-engineered. It can't be memorized from a few examples. It has to come from actual operational data — thousands of loans, hundreds of credit decisions, years of customer relationships, real portfolio performance data.

When an LLM processes a request, here's what goes into that context window:

Instructions/System Prompt — This is the agent's identity and core guidance. "You are a commercial credit analyst for a mid-sized regional bank..." This sets the frame.

Available Tools — What can the agent actually do? Can it query databases? Run calculations? Access external APIs? The tools define the agent's capabilities.

Retrieved Information — This is RAG in action. Based on the current task, what relevant information should be pulled in? Historical credit decisions? Similar loan structures? Regulatory guidance? Performance data from comparable portfolios?

Long-term Memory — What does the agent know about this customer, this relationship, this project from past interactions? In banking, this might be years of relationship history.

State/History (Short-term memory) — What's happened in this specific conversation or workflow? This maintains coherence across multi-step processes.

User Prompt — The actual request or task at hand.

Structured Output Requirements — How should the agent format its response? This ensures consistency and enables downstream processing.
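To make the list above concrete, here is a minimal Python sketch of how those seven elements might be gathered and rendered into a single context window. Everything in it is an assumption made for illustration: the `AgentContext` class, its field names, the section headings, and the tool names are hypothetical, and real agent frameworks structure this differently. The `missing_elements` helper simply reports which elements were left empty, which is one rough way to see how much of the window is still generic.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AgentContext:
    """Hypothetical container for the seven context elements listed above."""
    instructions: str
    tools: list[str] = field(default_factory=list)
    retrieved: list[str] = field(default_factory=list)
    long_term_memory: list[str] = field(default_factory=list)
    history: list[str] = field(default_factory=list)
    user_prompt: str = ""
    output_requirements: str = ""


def _section(title: str, items: list[str]) -> str:
    # Render one labeled section; mark empty sections so gaps stay visible.
    body = "\n".join(f"- {item}" for item in items) if items else "(none)"
    return f"## {title}\n{body}"


def build_context_window(ctx: AgentContext) -> str:
    """Concatenate every element into the text a model would receive."""
    sections = [
        f"## Instructions\n{ctx.instructions}",
        _section("Available tools", ctx.tools),
        _section("Retrieved information", ctx.retrieved),
        _section("Long-term memory", ctx.long_term_memory),
        _section("State / history", ctx.history),
        f"## User prompt\n{ctx.user_prompt}",
        f"## Output requirements\n{ctx.output_requirements}",
    ]
    return "\n\n".join(sections)


def missing_elements(ctx: AgentContext) -> list[str]:
    """Return the names of context elements that were left empty."""
    return [name for name, value in vars(ctx).items() if not value]


# Illustrative usage with made-up values.
example = AgentContext(
    instructions="You are a commercial credit analyst for a mid-sized regional bank.",
    tools=["query_loan_database", "run_covenant_calculation"],  # hypothetical tool names
    user_prompt="Summarize the risks in this renewal request.",
)
print(build_context_window(example))
print(missing_elements(example))
```

In this example, `missing_elements` reports that retrieval, long-term memory, history, and output requirements are all empty, so the agent would be working from instructions and tools alone.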

Now here's the critical point: every single one of these context elements needs to be populated with relevant, accurate, domain-specific information.

For banking agents, this means:

Instructions need to reflect actual institutional policies, not generic banking knowledge

Tools need to connect to real banking systems - core banking, credit bureaus, document repositories, pricing engines

Retrieved information needs to come from your institution's actual data - real credit decisions, real performance data, real customer interactions

Memory needs to understand banking relationships - not just customer data, but the full context of how that customer does business with you

Structured outputs need to match regulatory requirements and operational workflows
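As a small illustration of the last item, the sketch below checks an agent's JSON response against a fixed shape before anything downstream consumes it. It is only a sketch under stated assumptions: the `CreditReviewOutput` fields and the rating scale in `ALLOWED_RATINGS` are invented for this example and do not represent any regulatory schema or nCino format.

```python
from __future__ import annotations

import json
from dataclasses import dataclass

# Hypothetical rating scale, made up for this example.
ALLOWED_RATINGS = {"pass", "watch", "substandard"}


@dataclass(frozen=True)
class CreditReviewOutput:
    """Hypothetical shape that downstream workflows would expect."""
    risk_rating: str
    summary: str
    policy_exceptions: tuple[str, ...]


def parse_agent_output(raw: str) -> CreditReviewOutput:
    """Parse and validate a JSON response; raise ValueError if it does not fit."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("response must be a JSON object")

    rating = data.get("risk_rating")
    if not isinstance(rating, str) or rating not in ALLOWED_RATINGS:
        raise ValueError(f"unexpected risk_rating: {rating!r}")

    summary = data.get("summary")
    if not isinstance(summary, str) or not summary.strip():
        raise ValueError("summary must be a non-empty string")

    exceptions = data.get("policy_exceptions", [])
    if not isinstance(exceptions, list) or not all(isinstance(e, str) for e in exceptions):
        raise ValueError("policy_exceptions must be a list of strings")

    return CreditReviewOutput(rating, summary, tuple(exceptions))
```

Rejecting a malformed response at this boundary, rather than passing free text along, is what lets the rest of a workflow rely on the output being consistent.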

This is why context engineering is fundamentally different from prompt engineering. Prompt engineering is about crafting the right question. Context engineering is about building the entire information architecture that makes intelligent responses possible.

And this is where most organizations are struggling. They have data trapped in silos. They have institutional knowledge locked in people's heads. They have workflows that have evolved organically over decades. Translating all of that into context that an agent can use is not a prompt engineering problem - it's a data engineering problem, a knowledge management problem, and fundamentally a business architecture problem.

People will always matter.

I hope you've noticed a trend so far. I've written almost 1,500 words about building an AI-native nCino and I have yet to mention which AI tools and systems we're using (I will touch on that). That's because what we have learned these past two years is that the fundamentals matter more than the technology.

AI is a force multiplier. AI doesn't fix broken fundamentals. It will multiply your current foundation. If your organization has a prioritization problem, AI will only amplify the chaos. If your data is in silos and not easily accessible, AI will lack the proper context to be useful.

And the last fundamental (maybe it should've been the first) is that people will always matter. You need humans at the helm. Every organization is created to solve human problems, which means diversity of human experiences, skills and perspectives is critical. Creating a culture that leverages the best of humanity is what we believe will make a true AI-native company.

Amplify the human.

Image: IBM training manual from 1979.

AI does not have a soul. It has no empathy, no accountability or morals, no critical thinking, no governance and no ethics—all of which are uniquely human qualities that are critical for companies. These will become your differentiators.

Because at the end of the day, banking is inherently an emotional journey. It’s what people want and need when buying their family home, following their dreams and passions, growing their business, and preparing for their future. And people are looking to banks, credit unions and financial institutions of all sizes to give them the trust and accountability to help them achieve their goals. True relationship banking.

The banking and financial services industry has the opportunity now to deliver what we have been promising for so long: true personalized banking.

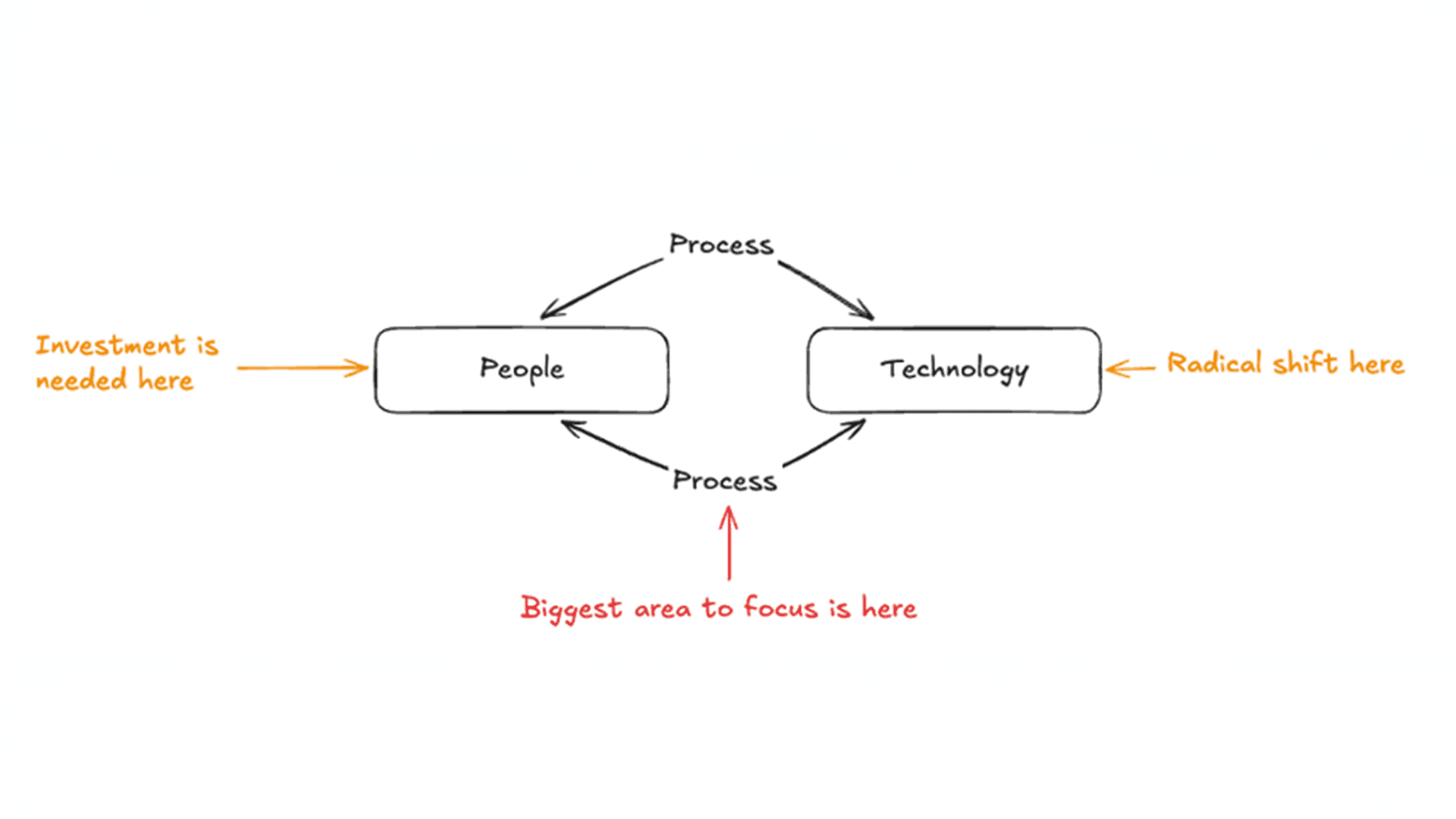

This means that, as a trusted financial institution, you must rethink your organization. What skills do you need? How do you structure your organization around real problems? What changes are required in your ways of working, from strategy to execution? These are all organizational questions to ask, and human problems to solve.

You need to invest in your people. The technology has shifted radically but if you don’t focus on your process, how you produce outcomes won’t change. In other words, if you keep working the way you were prior to AI, then you will not see the outcomes the technology has promised you.

At nCino, this meant that we had to focus first and foremost on our culture of innovation. How do we encourage bottom-up innovation? Through executive sponsorship, autonomy and tooling. Everyone is encouraged to challenge their current workflows and processes. Do we need this multi-step process? If so, what can we do to automate it? We have introduced weekly “Innovation Friday” sessions, where teammates from every division, including Sales, Customer Success, Product, and Engineering, share their learnings, wins and failures.

We’ve rolled out Claude for Enterprise across our 1,400+ teammates. We’ve seen Claude enable up to 30% improvements in coding, become our default assistant for data analysis and content creation, and be used heavily to build AI-embedded features within our product. The deployment emphasized change management through executive sponsorship, grassroots champions, and continuous learning resources rather than mandated usage. As a result, Claude became a core productivity tool, with 87% of employees having accessed it within six months.

Closing thoughts

Regardless of where you sit on the AI spectrum, one thing is clear—it has already embedded itself across society. AI will become so pervasive that it will be seamless, invisible, ingrained in how we live.

Which means the companies that win won't be the ones with the best models. They'll be the ones who solved the right problems.

So, this is our learning:

Focus on your problems, not the technology. Your strategy matters more than your stack.

Design systems with context. Your fundamental software, data engineering and architecture principles still matter—maybe more than ever.

Invest in your people. Re-wire your organization around how you prioritize, how you work, how you decide. Let technology handle the routine. Keep humans for the weird stuff—the hard, complex, intuitive judgment calls that actually move your business forward.

The technology works. Not perfectly, but well enough. The economics work. The window to move is now—not because of technology acceleration, but because everyone else is moving at a frantic pace.

If you move with focus, clear problems, systems designed for context, and investment in your people—you don't just adopt AI. You become an AI-native company. And that transformation might be the only one that matters.